The Content Security Policy (CSP) defines restrictions for webviews to reduce the attack surface of applications for Cross Site Scripting (XSS) and other attacks. The stricter the policy, the fewer possibilities remain for attackers to inject malicious functionality in case of input validation flaws. This is especially important for hybrid apps, such as those built with Cordova, that use JavaScript to access granted OS functionality via a JavaScript bridge. An attacker who can inject their own code into a hybrid app’s webview can alter how the app uses accessible data and sensors.

A strict CSP can prevent the execution of injected functionality by restricting the executable code fragments to resources that are less accessible to an attacker. However, strict restrictions also require alternative programming styles during app development, which sometimes are inconvenient or unknown to the developer. Additionally, some external libraries or other existing code may not work out of the box with restrictive CSP settings, increasing the pressure for using a less restrictive policy.
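One such alternative style can be sketched as follows. A policy without 'unsafe-inline' blocks inline event handler attributes such as onclick, so handlers have to be attached from a script file that is loaded from an allowed source. The file name app.js, the data-action attribute, and the function bindClickHandlers are hypothetical names chosen for this illustration; they are not part of Cordova or any library.

```javascript
// app.js (hypothetical file), loaded with <script src="app.js"></script>
// from the app's own origin so that a script-src of 'self' permits it.

// The markup declares an action name instead of inline code, e.g.
//   <div data-action="openSettings">Click Me</div>
function bindClickHandlers(root, handlers) {
  let bound = 0;
  for (const element of root.querySelectorAll("[data-action]")) {
    const action = element.dataset.action;
    // Only attach functions that were explicitly registered under this name.
    if (Object.prototype.hasOwnProperty.call(handlers, action)) {
      element.addEventListener("click", handlers[action]);
      bound += 1;
    }
  }
  return bound; // number of handlers attached
}

// Usage in the page (assumption: called after the DOM has been parsed):
// bindClickHandlers(document, { openSettings: () => console.log("settings") });
```

Because the page no longer contains executable attributes, an injected onclick attribute is not run under such a policy, while the registered handlers keep working.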

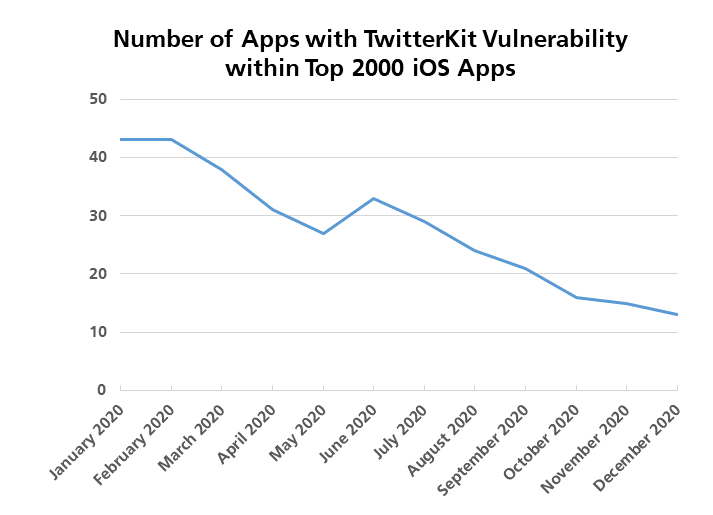

With Appicaptor we inspect apps for configured CSPs to evaluate how the restrictions affect the security of the app. A single missing sanitization of user input may render a hybrid app vulnerable to hijacking of its functionality. As such vulnerabilities can be introduced by a missing ‘S’ in the HTTPS scheme when loading external JavaScript resources or by flawed sanitization of user input, hybrid apps should reduce these risks by applying CSPs as a ready-to-use second line of defense. However, 60.5% of the analyzed hybrid apps for iOS and 72.3% of the hybrid apps for Android do not define a CSP. Each analyzed app set consists of the 2,000 most used apps as ranked by the Android and iOS app stores.

One might consider it a good starting point that more than 25% of the hybrid apps do use a CSP as a second line of defense. However, analyzing the actual CSPs of these apps shows that many policies are weak. On iOS, 39.5% (Android: 27.6%) of the hybrid apps with a CSP deactivate important protective restrictions and could therefore, from an attacker’s perspective, be rated the same as apps with no defined CSP. So let’s see why.

This is a typical basic example of an observed CSP:

<meta http-equiv="Content-Security-Policy" content="default-src 'self' data: 'unsafe-inline'; img-src *;">

CSPs are constructed in a simple fashion: each directive starts with the directive name, followed by the list of sources it allows, and is terminated with a semicolon. In the example, the CSP starts with the default-src directive. Its declared restrictions are used as a fallback for other fetch directives that are not defined. For example, script-src and object-src are not declared, so the 'unsafe-inline' configuration is applied to both of them. It is not applied to img-src, because that directive is declared in the example and the browser therefore does not fall back to default-src.

The intention here is to allow loading resources only from 'self', which is the origin the HTML page with the CSP entry was loaded from, in order to prevent the injection of external scripts for Cross Site Scripting. Only images may be loaded from any source, as declared by the star character in the img-src directive.
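The fallback behavior can be illustrated with a small sketch. It is a deliberately simplified model and not a complete CSP parser: the function names parseCsp and effectiveSources are made up for this example, and the fallback list covers only a subset of the fetch directives.

```javascript
// Split a policy string into a map of directive name -> list of sources.
function parseCsp(policy) {
  const directives = new Map();
  for (const part of policy.split(";")) {
    const tokens = part.trim().split(/\s+/).filter(Boolean);
    if (tokens.length === 0) continue; // e.g. the segment after a trailing ';'
    const name = tokens[0].toLowerCase();
    // The first occurrence of a directive wins; repeats are ignored.
    if (!directives.has(name)) directives.set(name, tokens.slice(1));
  }
  return directives;
}

// Subset of fetch directives that fall back directly to default-src.
const FALLS_BACK_TO_DEFAULT = new Set([
  "script-src", "object-src", "img-src", "style-src",
  "connect-src", "font-src", "media-src",
]);

// Return the source list that governs a directive, or null if unrestricted.
function effectiveSources(policy, directive) {
  const directives = parseCsp(policy);
  if (directives.has(directive)) return directives.get(directive);
  if (FALLS_BACK_TO_DEFAULT.has(directive) && directives.has("default-src")) {
    return directives.get("default-src");
  }
  return null;
}

const observedPolicy = "default-src 'self' data: 'unsafe-inline'; img-src *;";
console.log(effectiveSources(observedPolicy, "script-src"));
// [ "'self'", "data:", "'unsafe-inline'" ]  (inherited from default-src)
console.log(effectiveSources(observedPolicy, "img-src"));
// [ "*" ]  (declared explicitly, so no fallback)
```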

However, by declaring the keyword 'unsafe-inline', this CSP allows executing JavaScript code injected at any place in an HTML page, such as

User entered <script>doSomethingBad();</script> text

or

<div onclick="doSomethingBad();">Click Me</div>

Allowing 'unsafe-inline' together with the data: scheme for object-src, as in the example, lets an attacker inject script code into <object>, <embed>, and <applet> elements:

<object data="data:text/html,<script>doSomethingBad();</script>"></object>

or by using JavaScript inside an SVG, embedded as a data: URL:

<embed src="data:image/svg+xml,<svg onload='doSomethingBad();' xmlns='http://www.w3.org/2000/svg'></svg>" type="image/svg+xml" width="1" height="1" />

Fortunately, in most common cases the code injected via the data: scheme is treated as a separate frame. It cannot interact with the JavaScript bridge of a hybrid app, because the bridge is located in the parent index.html page and the Same Origin Policy prevents access to it. However, attackers can still use this for UI manipulation attacks, tricking users into disclosing credentials or other critical data in crafted dialogs.

Besides the many other shortcomings of the observed CSPs regarding inline XSS protection, about 10% of the analyzed hybrid apps (iOS / Android) do not properly restrict the sources for loading scripts. This is caused by using the star character as a wildcard for the scheme. An attacker would then be able to inject a script element that loads malicious code from any domain. The same applies if the scheme https: is used instead. This configuration option seems to confuse developers, as to some it might look as if it just prevented HTTP access. However, when used it allows access to any domain via HTTPS. So, when developers use https: together with a list of domain names they want to allow, the domain list has no effect, because all domains are already allowed:

default-src 'self' data: https: ssl.gstatic.com maps.google.com;

Such ineffective domain restrictions were observed in about 7% (iOS / Android) of the analyzed hybrid apps, which might give a false sense of security. Instead, the developer would need to specify the scheme together with the domain (e.g. https://ssl.gstatic.com) to restrict access to the listed domains and allow this access only via HTTPS.
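Why the domain list becomes ineffective can be shown with a simplified model of how a URL is matched against a source list. This is only a sketch under stated assumptions: ports, paths, and wildcard hosts such as *.example.com are ignored, the function names are invented for this example, and the origin https://localhost and the domain evil.example are placeholders.

```javascript
// Simplified model of CSP source matching (not the full algorithm).
function matchesSource(source, url, selfOrigin) {
  const target = new URL(url);
  const self = new URL(selfOrigin);
  if (source === "*") {
    // The star covers network schemes but not data: URLs
    // (reduced to http/https in this sketch).
    return target.protocol === "http:" || target.protocol === "https:";
  }
  if (source === "'self'") return target.origin === self.origin;
  if (source.startsWith("'")) return false; // other keywords never match a URL
  if (/^[a-z][a-z0-9+.-]*:$/i.test(source)) {
    // A scheme source such as https: matches every URL with that scheme.
    return target.protocol === source.toLowerCase();
  }
  const match = /^(?:([a-z][a-z0-9+.-]*):\/\/)?([^\/:]+)/i.exec(source);
  if (!match) return false;
  const [, scheme, host] = match;
  // Assumption: without an explicit scheme, the page's own scheme is required.
  const expectedProtocol = scheme ? `${scheme.toLowerCase()}:` : self.protocol;
  return target.protocol === expectedProtocol && target.hostname === host.toLowerCase();
}

function isSourceAllowed(sources, url, selfOrigin) {
  return sources.some((source) => matchesSource(source, url, selfOrigin));
}

const appOrigin = "https://localhost"; // placeholder origin of the app page
const observed = ["'self'", "data:", "https:", "ssl.gstatic.com", "maps.google.com"];
const tightened = ["'self'", "data:", "https://ssl.gstatic.com", "https://maps.google.com"];

console.log(isSourceAllowed(observed, "https://evil.example/x.js", appOrigin));       // true
console.log(isSourceAllowed(tightened, "https://evil.example/x.js", appOrigin));      // false
console.log(isSourceAllowed(tightened, "https://ssl.gstatic.com/lib.js", appOrigin)); // true
```

In the observed list the https: entry alone accepts the attacker's URL, so the two host names never matter. In the tightened list only the named hosts are accepted, and only over HTTPS.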

Unfortunately, parsing errors that can prevent the intended protection were also detected in about 5% (iOS / Android) of the analyzed hybrid apps. In general, such parsing errors could lead to stricter or less strict restrictions, depending on which part of the CSP is affected. However, accidentally stricter policies are more likely to cause functional issues than accidentally less strict ones, so the chance is much higher that they are detected in functional testing. For example, we observed parsing errors that prevent some or all directives from being applied. In all these cases, the errors lead to a less strict CSP, e.g. by dropping a strict default-src directive that would otherwise serve as a secure fallback for other non-specified directives.
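The concrete parsing errors found in the analysis are not listed here, so the following sketch only checks for a few mistakes that are assumed to be typical: a misspelled or unknown directive name, a repeated directive, a keyword written without single quotes, and a missing semicolon between two directives. The function lintCsp and the directive list are illustrative and incomplete.

```javascript
// Subset of directive names known to browsers (assumption: not exhaustive).
const KNOWN_DIRECTIVES = new Set([
  "default-src", "script-src", "style-src", "img-src", "connect-src",
  "font-src", "object-src", "media-src", "frame-src", "child-src",
  "worker-src", "manifest-src", "base-uri", "form-action",
]);

// Keywords that only take effect when wrapped in single quotes.
const UNQUOTED_KEYWORDS = new Set(["self", "none", "unsafe-inline", "unsafe-eval"]);

function lintCsp(policy) {
  const findings = [];
  const seen = new Set();
  for (const part of policy.split(";")) {
    const tokens = part.trim().split(/\s+/).filter(Boolean);
    if (tokens.length === 0) continue;
    const name = tokens[0].toLowerCase();
    if (!KNOWN_DIRECTIVES.has(name)) {
      // Unknown directives are ignored, so their restrictions are lost.
      findings.push(`unknown directive "${tokens[0]}" is ignored`);
      continue;
    }
    if (seen.has(name)) {
      findings.push(`repeated directive "${name}" is ignored`);
      continue;
    }
    seen.add(name);
    for (const source of tokens.slice(1)) {
      const lower = source.toLowerCase();
      if (UNQUOTED_KEYWORDS.has(lower)) {
        findings.push(`${source} in ${name} lacks single quotes and is read as a host name`);
      } else if (KNOWN_DIRECTIVES.has(lower)) {
        findings.push(`${source} appears inside ${name}; a semicolon may be missing`);
      }
    }
  }
  return findings;
}

console.log(lintCsp("default-src: 'self'; script-src self"));
console.log(lintCsp("default-src 'self' script-src 'self'"));
```

The first call reports that default-src: (with a colon) is not a directive name, which silently removes the fallback for all other directives, and that self is missing its quotes. The second call reports the missing semicolon before script-src.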

CSPs can have a strong impact on app security. They are especially important for hybrid apps. The analysis results show that it is important to check if apps do use a CSP and that the CSP needs to be evaluated carefully. In case of doubt, developers should check the CSP with a free tool such as the Google CSP Evaluator to better understand the impact of the directives and to prevent parsing flaws.