How has the cryptographic quality of the top 2000 Android and iOS apps evolved over the past three years? We give an overview of the hash functions and symmetric encryption algorithms in use then and now. The results indicate that the majority of apps still use insecure cryptography.

Cryptographic algorithms serve many use cases, for example encryption, hashing and signing. Cryptography has been in use for centuries, and some algorithms have proven to be easily breakable (under certain configurations or conditions) and should thus be avoided. For a developer with little cryptographic background, it is not easy to choose secure algorithms and configurations from the plenitude of options. Cryptographic agility is the ability to exchange (insecure) cryptographic algorithms in computer programs for secure ones.
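To make the idea of cryptographic agility more concrete, here is a minimal sketch in Python. It assumes that the hash algorithm is chosen through a single configuration value and that every stored digest is tagged with the algorithm that produced it. The names `ALLOWED_HASHES`, `CONFIGURED_HASH`, `digest` and `verify` are hypothetical and only serve the illustration; they are not taken from any of the analyzed apps.

```python
import hashlib
import hmac

# Hypothetical policy: the only hash algorithms this application accepts.
ALLOWED_HASHES = {"sha256", "sha384", "sha512"}

# Hypothetical configuration value; changing it switches the algorithm
# for all newly created digests without touching the calling code.
CONFIGURED_HASH = "sha256"


def digest(data: bytes, algorithm: str = CONFIGURED_HASH) -> str:
    """Return '<algorithm>$<hex digest>' so the value records how it was made."""
    if algorithm not in ALLOWED_HASHES:
        raise ValueError(f"hash algorithm not allowed: {algorithm}")
    return f"{algorithm}${hashlib.new(algorithm, data).hexdigest()}"


def verify(data: bytes, stored: str) -> bool:
    """Check data against a tagged digest; reject tags outside the policy."""
    algorithm, _, expected = stored.partition("$")
    if algorithm not in ALLOWED_HASHES:
        return False
    actual = hashlib.new(algorithm, data).hexdigest()
    return hmac.compare_digest(actual, expected)


tagged = digest(b"hello")
print(tagged.split("$")[0], verify(b"hello", tagged))
```

Because the algorithm name travels with the digest, old values remain verifiable as long as their algorithm stays on the allow-list, and removing an algorithm from the list is a single change.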

Analysis Environment & Apps

The results are based on Appicaptor analyses of the top 2000 Android and iOS apps. Appicaptor analyzed the current version of each app along with its three-year-old counterpart. The apps are grouped into top apps from the top 2000 list and business apps uploaded or requested by Appicaptor customers.

Used Hashing Functions

Hashing functions such as MD5 and its predecessors as well as SHA1 have long been known to be insecure and prone to collision attacks. NIST advises moving to more secure alternatives from the SHA2 family, ranging from SHA224 up to SHA512.

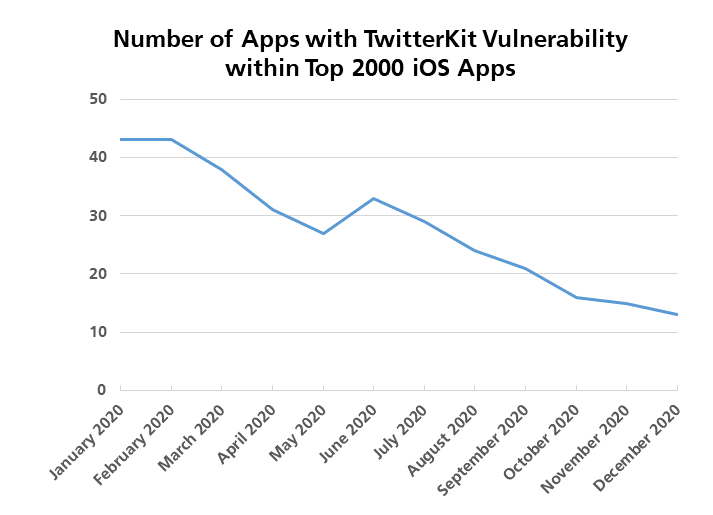

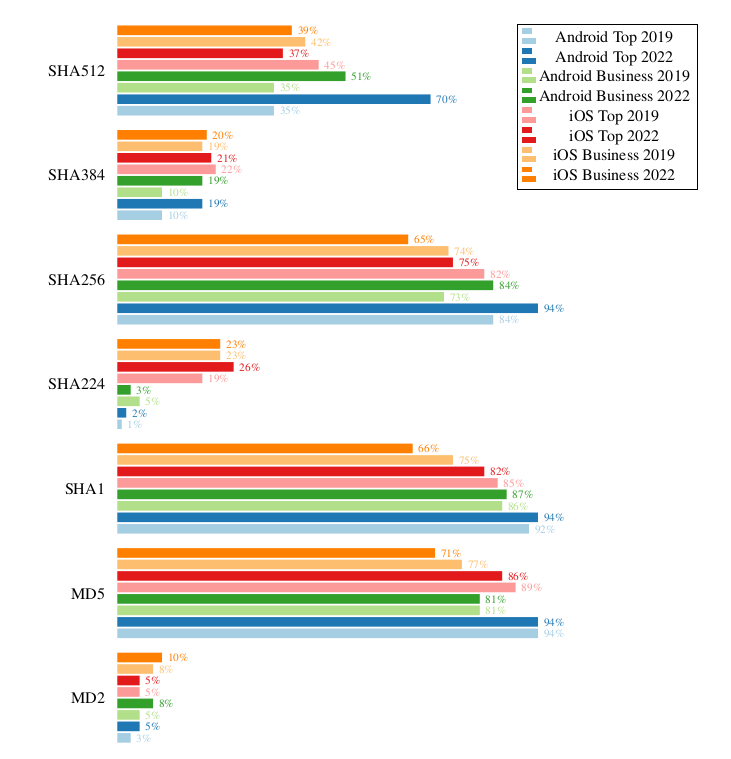

We first analyzed the hashing functions used in business apps and top apps for iOS and Android to capture the current situation. Afterwards, we compared the 2019 versions of the apps with the 2022 versions to show the trend in cryptographic agility.

Surprisingly, the outdated SHA1 and MD5 algorithms are still found in 70% to 80% of the analyzed apps, which makes them the most used hashing algorithms on iOS and Android in both the top and the business app groups. Even the long outdated MD2 algorithm is still used in 5% to 10% of all apps. This is alarming news regarding security. SHA256 is the only secure algorithm that is as widespread as MD5 and SHA1.

Comparing hashing in top and business apps, one can see that business apps use less hashing functionality in general.

Looking at the evolution of the top apps on Android and iOS from 2019 to 2022, one can see that the usage of MD5 and SHA1 remains mostly constant with only slight variations. On Android, usage of the SHA2 family, and especially of SHA512, increased. SHA512 usage in apps doubled, which at first sight is a positive trend. However, since the usage of outdated algorithms remains constant, apps merely use more hashing algorithms overall; secure algorithms are not replacing the outdated ones. On iOS, the situation is reversed: usage of the SHA2 family even declines, which suggests that less hashing is used on iOS.

In conclusion, even though Android developers are embracing the SHA2 family, outdated hashing functions remain heavily used in Android and iOS apps, at a constant level.

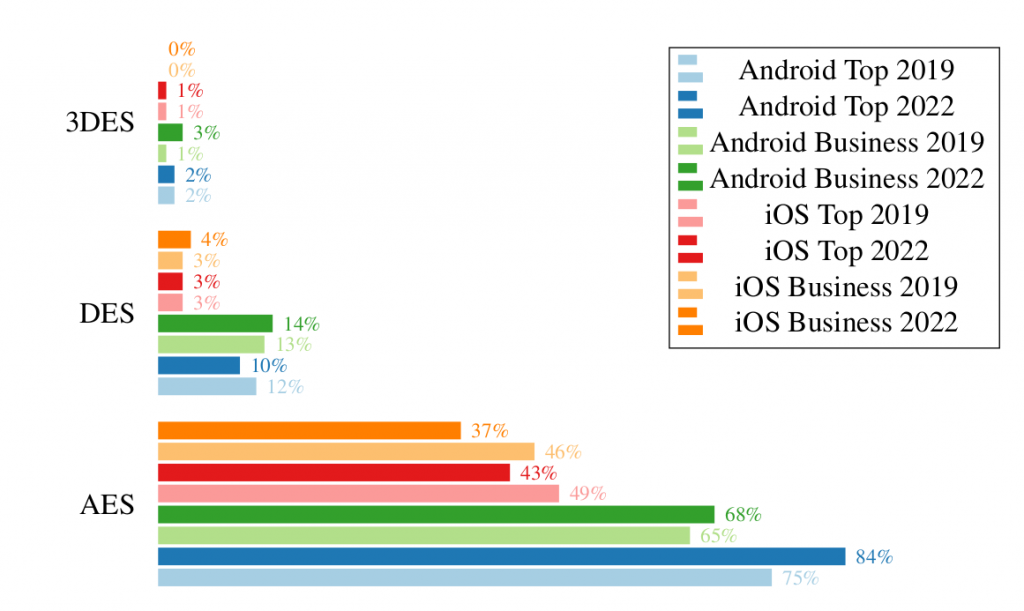

Used Symmetric Encryption Algorithms

As one would expect, AES is the most widely used symmetric encryption algorithm. DES and 3DES are used in around 3% of the analyzed apps. One exception is DES on Android, which still seems very popular and is used in around 12% of the tested apps. Over the years, DES and 3DES usage remains mostly constant. AES usage, however, increases on Android in the latest app versions, while at the same time it decreases on iOS.

Especially on Android, a discrepancy between business and top apps becomes obvious. Business apps seem to use less AES and slightly more DES encryption.

Usage of AES in Insecure ECB Mode

Usage of the ECB mode is a very common weakness when applying cryptography. ECB mode outputs the same ciphertext block for the same plaintext block (when the same key is used). This means that patterns are not hidden very well and one could draw conclusions about the plaintext. Other modes avoid this: in CBC mode, the encryption of each block depends on the preceding ciphertext block and a random initialization vector, and in CTR mode each block is combined with a keystream derived from a nonce and a block counter. In both cases, identical plaintext blocks yield different ciphertext blocks, which hides patterns.
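The pattern leak can be reproduced in a few lines. The following sketch is an illustration under loud assumptions: Python's standard library ships no AES, so a toy 4-round Feistel cipher (`toy_encrypt_block`, a hypothetical helper that is NOT secure) stands in for the block cipher. Only the difference between the two modes of operation is the point here.

```python
import hashlib
import hmac
import os

BLOCK = 16  # block size in bytes, the same as AES
HALF = BLOCK // 2


def _round(key: bytes, i: int, half: bytes) -> bytes:
    # Round function: keyed hash of one half block, truncated to 8 bytes.
    return hmac.new(key, bytes([i]) + half, hashlib.sha256).digest()[:HALF]


def toy_encrypt_block(key: bytes, block: bytes) -> bytes:
    """Toy 4-round Feistel cipher. Stand-in for AES, NOT secure."""
    left, right = block[:HALF], block[HALF:]
    for i in range(4):
        mixed = bytes(a ^ b for a, b in zip(left, _round(key, i, right)))
        left, right = right, mixed
    return left + right


def blocks(data: bytes) -> list:
    return [data[i:i + BLOCK] for i in range(0, len(data), BLOCK)]


def ecb_encrypt(key: bytes, plaintext: bytes) -> bytes:
    # ECB: every block is encrypted on its own (length must be a multiple of BLOCK).
    return b"".join(toy_encrypt_block(key, b) for b in blocks(plaintext))


def ctr_encrypt(key: bytes, nonce: bytes, plaintext: bytes) -> bytes:
    # CTR: encrypt nonce || counter to get a keystream block, then XOR it in.
    out = bytearray()
    for counter, block in enumerate(blocks(plaintext)):
        keystream = toy_encrypt_block(key, nonce + counter.to_bytes(8, "big"))
        out += bytes(a ^ b for a, b in zip(block, keystream))
    return bytes(out)


key = os.urandom(16)
nonce = os.urandom(8)
plaintext = b"ATTACK AT DAWN!!" * 3  # three identical 16-byte blocks

# Number of distinct ciphertext blocks: ECB repeats, CTR does not.
print(len(set(blocks(ecb_encrypt(key, plaintext)))))         # 1
print(len(set(blocks(ctr_encrypt(key, nonce, plaintext)))))  # 3
```

With ECB, an observer learns that all three plaintext blocks are equal without knowing the key. With CTR, the three ciphertext blocks differ because each one is combined with a different keystream block.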

We are aware that under certain conditions the usage of ECB mode is fine, but we advise against using it since secure conditions might easily become insecure during app upgrades, code restructuring or new requirements.
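One way to act on this advice in a Java or Android code base is to flag cipher transformation strings that select ECB. The sketch below is an assumption-laden illustration and not a description of how Appicaptor detects ECB usage. It assumes the strings follow the `algorithm/mode/padding` form passed to Java's `Cipher.getInstance`, and that a bare algorithm name such as `"AES"` falls back to ECB, which is the default in common providers. The helper `uses_ecb` and the set `BLOCK_CIPHERS` are hypothetical names.

```python
# Block ciphers for which the mode of operation matters (simplified list).
BLOCK_CIPHERS = {"AES", "DES", "DESEDE"}


def uses_ecb(transformation: str) -> bool:
    """Return True if a Java-style transformation string selects ECB mode."""
    parts = [p.strip().upper() for p in transformation.split("/")]
    if parts[0] not in BLOCK_CIPHERS:
        # For example "RSA/ECB/PKCS1Padding": the mode field carries no
        # block-chaining meaning for RSA, so it is not flagged here.
        return False
    if len(parts) == 1:
        # Only the algorithm is given; assume the provider defaults to ECB.
        return True
    return parts[1] == "ECB"


for t in ["AES", "AES/ECB/PKCS5Padding", "AES/GCM/NoPadding", "RSA/ECB/PKCS1Padding"]:
    print(t, uses_ecb(t))
```

The second case in the function is the one that is easy to miss in reviews: code that never mentions ECB can still end up using it.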

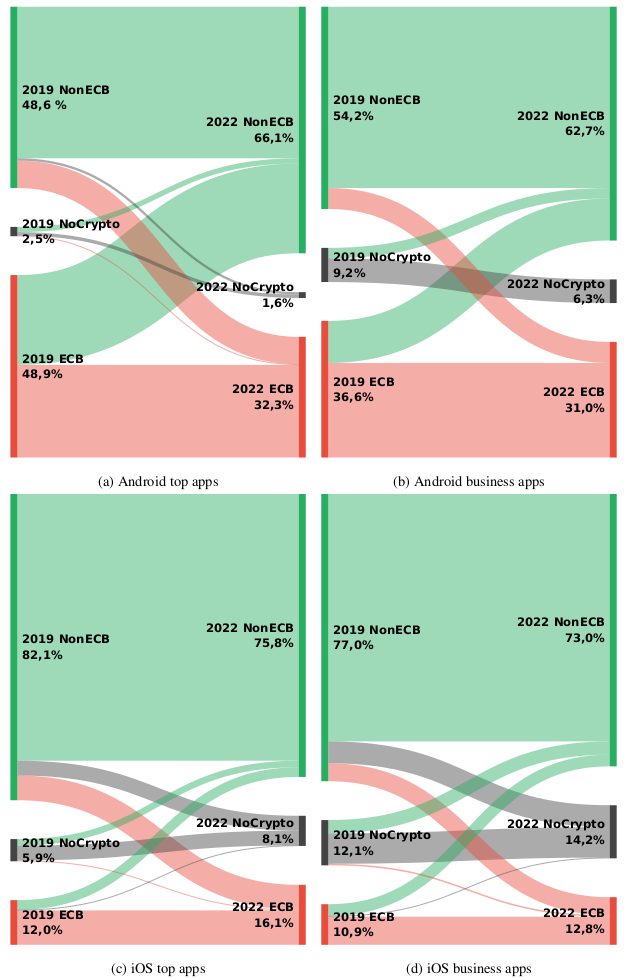

We visualize the usage of insecure ECB mode versus other modes. The visualization focuses on the explicit transitions between secure and insecure modes from 2019 to 2022.

Looking at the transitions for Android top apps, we see that ECB mode usage shrinks from 48.9% in 2019 to 32.3% in 2022, which is very positive. One can also see that many apps shift from ECB mode to other, secure modes. However, a small percentage of apps that used secure cryptography in 2019 are using ECB in 2022.

The situation on iOS looks quite different. The transition diagram shows that only 12% of the top apps on iOS used ECB mode in 2019, while the majority used secure alternatives. However, after three years, things didn't turn out well for iOS apps. With 16% of top apps and 12% of business apps in 2022, more apps are using insecure ECB mode compared to 2019. Even though the numbers increased on iOS, ECB usage on Android is still far more widespread, although it is decreasing.
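The transition shares behind such a diagram can be computed by counting, per app, the pair of mode classes observed in both years. The following sketch uses made-up observations for five hypothetical apps; the data and the helper `transition_shares` are assumptions for illustration and do not reproduce the study's numbers.

```python
from collections import Counter

# Hypothetical observations: app id -> (mode class in 2019, mode class in 2022).
observations = {
    "app.a": ("ECB", "ECB"),
    "app.b": ("ECB", "non-ECB"),
    "app.c": ("non-ECB", "non-ECB"),
    "app.d": ("non-ECB", "ECB"),
    "app.e": ("ECB", "non-ECB"),
}


def transition_shares(obs: dict) -> dict:
    """Map each (2019, 2022) pair to its share of all apps in percent."""
    counts = Counter(obs.values())
    total = len(obs)
    return {pair: 100.0 * n / total for pair, n in counts.items()}


for (before, after), share in sorted(transition_shares(observations).items()):
    print(f"{before} -> {after}: {share:.1f}%")
```

Each resulting pair corresponds to one flow in a transition diagram, and the shares of all pairs add up to 100%.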

Causes of ECB mode usage

Of all observations, we find the transitions from secure (non-ECB) to insecure (ECB) cryptography and vice versa the most interesting. Understanding the reasons for these transitions could give hints on how developers could be led towards better cryptography standards. The transitions from ECB to non-ECB and from non-ECB to ECB are particularly pronounced in Android top apps.

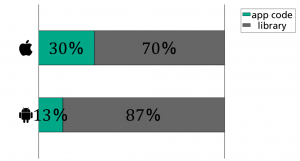

A more in-depth analysis of these apps reveals that in around 90% of the cases, the transition away from or towards ECB is triggered by an included third-party library. On iOS, the transition is triggered by third-party libraries in 70% of the cases and by code changes of the app developer in 30% of the cases.

Android

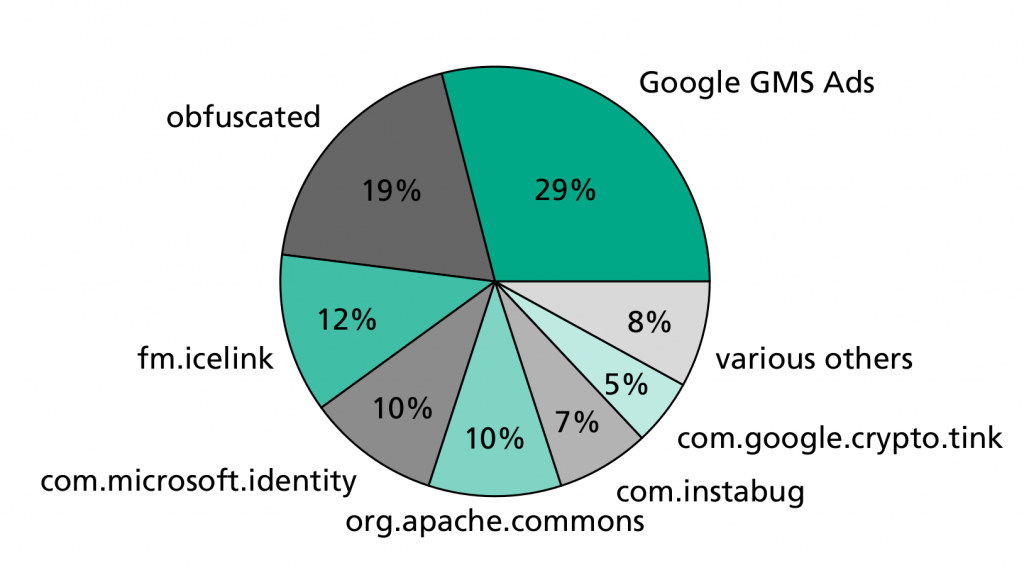

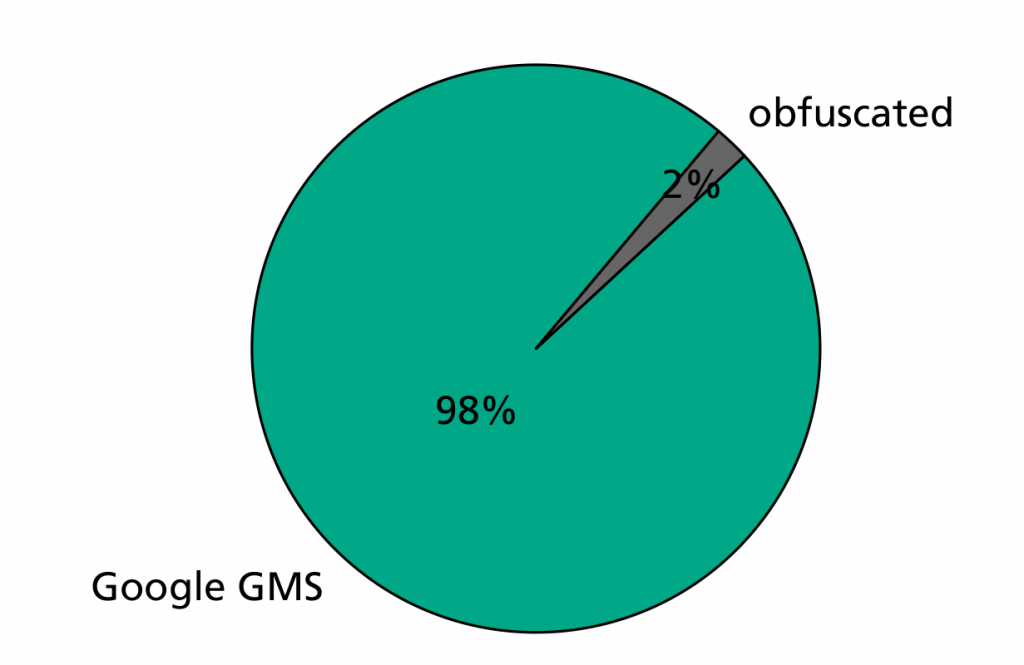

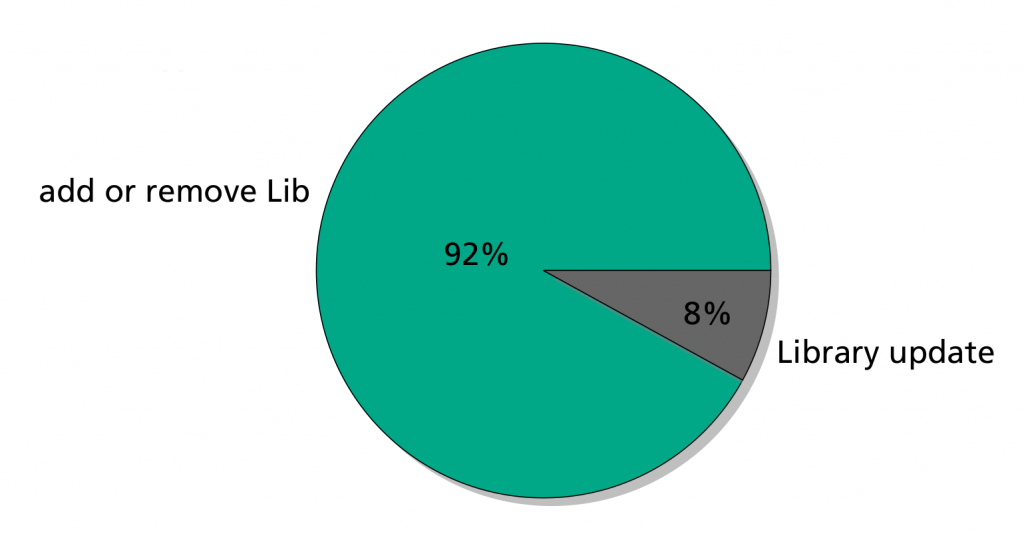

We analyzed which Android third-party libraries cause the transition from an insecure to a secure cryptography mode and vice versa. The transition from ECB to non-ECB is pretty clear: 98% of the apps discontinued using ECB because they no longer use the Google GMS Advertisement library. In 2% of the apps, the respective library was not identifiable due to obfuscation. The pie charts also show which libraries triggered the ECB usage in the 2022 apps. Leading with 29% is the Google GMS Advertisement library, followed by Icelink (12%), Microsoft Identity (10%) and Apache Commons (10%). We analyzed the respective apps in more depth to see whether ECB was introduced through an update of an existing third-party library or by adding a new third-party library that uses ECB. In fact, libraries with ECB usage were newly added in 92% of the cases, and only in 8% of the cases did a third-party library update introduce ECB.

Conclusion

This analysis shows that the majority of apps still use insecure cryptography. Unfortunately, the trend over the past years shows no significant, broad shift towards secure algorithms. Some individual aspects, like ECB usage on Android, point in the right direction. The detailed analysis of the causes of ECB usage on Android showed that this flaw is mostly introduced through third-party libraries used during app development.

Sources

This blog post is a condensed version of the award-winning paper published at the international ICISSP 2023 Conference. The full paper can be viewed at Scitepress.