Apple promotes MDM restrictions that are supposed to prevent data flows between managed and unmanaged apps. These restrictions can be bypassed with little effort using an app that is pre-installed on every new iOS device: the Shortcuts app. This is the result of our practical tests on iOS 17.1, and it should concern all enterprises that rely on these restrictions to operate personally enabled iOS devices in compliance with their enterprise policy.

Apple promotes data flow control between managed and unmanaged apps as a solution for separating personal and corporate data, “to keep it protected from both attacks and user missteps”. To achieve this protection, many enterprises configure the following Mobile Device Management (MDM) restrictions on their managed iOS devices:

Managed pasteboard. In iOS 15 and iPadOS 15 or later, this restriction helps control the pasting of content between managed and unmanaged destinations. When the restrictions above are enforced, pasting of content is designed to respect the Managed Open In boundary between third-party or Apple apps like Calendar, Files, Mail, and Notes. And with this restriction, apps can’t request items from the pasteboard when the content crosses the managed boundary.

Allow documents from managed sources in unmanaged destinations. Enforcing this restriction helps prevent an organization’s managed sources and accounts from opening documents in a user’s personal destinations. This restriction could prevent a confidential email attachment in your organization’s managed mail account from being opened in any of the user’s personal apps.

(see Apple Document “Managing Devices and Corporate Data”, accessed October 9th, 2023)
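For orientation, the two restrictions quoted above correspond to keys in Apple's Restrictions payload (`com.apple.applicationaccess`). The following is a minimal sketch of such a payload, not the configuration we used in our tests: the identifier, UUID and display name are placeholders, and it is a single payload dictionary rather than a complete, installable profile. In practice the MDM console usually generates this for you.

```python
import plistlib

# Sketch of a Restrictions payload that enables the data flow controls
# discussed in this article. Identifier, UUID and display name are
# placeholders chosen for illustration only.
restrictions_payload = {
    "PayloadType": "com.apple.applicationaccess",
    "PayloadVersion": 1,
    "PayloadIdentifier": "com.example.restrictions.dataflow",  # placeholder
    "PayloadUUID": "00000000-0000-0000-0000-000000000001",     # placeholder
    "PayloadDisplayName": "Managed data flow restrictions",    # placeholder
    # "Allow documents from managed sources in unmanaged destinations": off
    "allowOpenFromManagedToUnmanaged": False,
    # The reverse direction, often disabled together with the one above
    "allowOpenFromUnmanagedToManaged": False,
    # "Managed pasteboard" (iOS 15 / iPadOS 15 or later)
    "requireManagedPasteboard": True,
}

# Serialize to the XML property list format used by configuration profiles.
payload_xml = plistlib.dumps(restrictions_payload).decode("utf-8")
print(payload_xml)
```

The bypass described below works even with all three keys set as shown, which is why the configuration alone should not be treated as sufficient.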

With the first restriction, a user should not be able to paste pasteboard content into an unmanaged app if that content was copied from a managed app, or vice versa. The second restriction applies the same rule to documents. In our tests, iOS did prevent these actions once the MDM restrictions had been activated on the test devices.

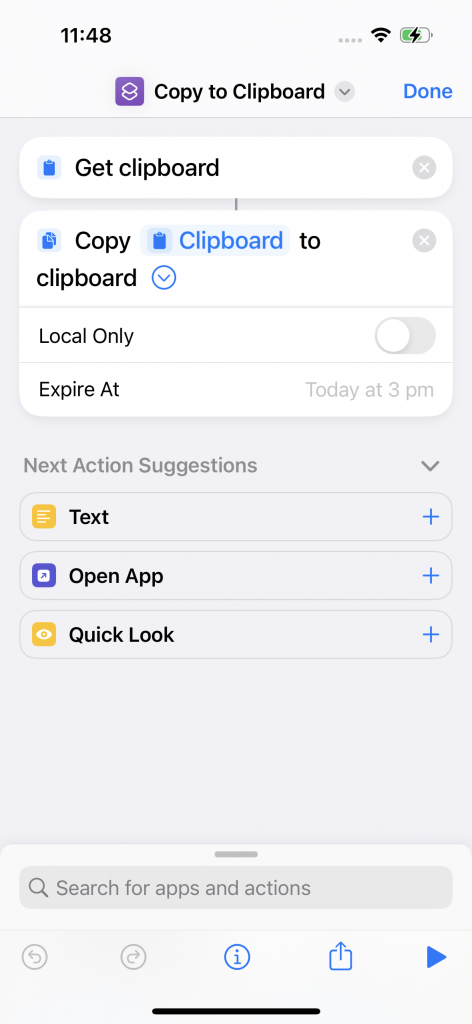

However, the iOS Shortcuts app, which has been an integral part of iOS since iOS 13 and can automate almost any task on the device, can be abused to bypass these restrictions. The first shortcut (below on the left side) simply reads the clipboard and copies the content back to the global clipboard, which is then also synced to all other devices on which the user is signed in with the same Apple account. When the user runs this shortcut, it strips off the information that the original content was copied from a managed app, so iOS no longer prevents pasting it into an unmanaged app. Each shortcut can also be combined with a trigger that automates its execution, so the bypass of the iOS MDM restrictions can be automated as well.

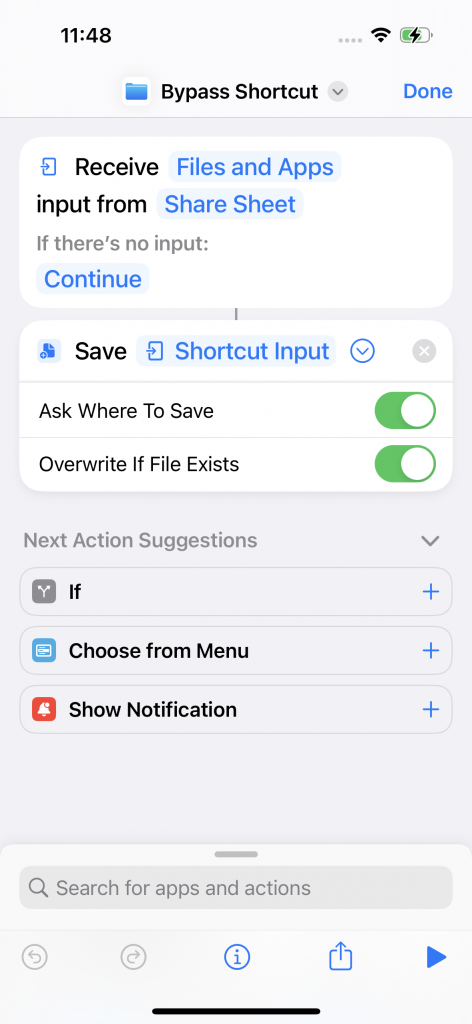

The second example shortcut takes its input from the share sheet in order to save the content of a document from a managed app. When this shortcut is selected in the share sheet, iOS prompts for the name and location of the file and stores it without the information that the content originated from a managed app. The document can then be opened from unmanaged apps as well. We created a video of both bypass methods to show the environment and the results in action. These are only simple examples of the problem; there are many more ways to use Shortcuts actions to bypass the iOS MDM restrictions.

Apparently, the Shortcuts app has the privilege to access any data from managed apps, although it is not configured as a managed app through the MDM profile. The app can therefore read the data, but when it writes the data out again, the result is treated as coming from an unmanaged app. The fix should therefore be to preserve the origin information of the content that the Shortcuts app processes. The app could then still access content from managed apps, but the processed content would remain marked as originating from a managed app, and the data separation would stay intact.
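To make the proposed fix concrete, here is a toy model of the behaviour we observed and of the behaviour we would expect. It is purely illustrative: the class, the functions and the `managed_origin` flag are hypothetical names of ours and do not reflect how iOS implements the pasteboard internally. The model also covers only the managed-to-unmanaged direction.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class ClipboardItem:
    """Hypothetical clipboard entry carrying an origin marker."""
    content: str
    managed_origin: bool


def paste_allowed(item: ClipboardItem, target_is_managed: bool) -> bool:
    # Simplified policy: managed content must not reach unmanaged apps.
    return target_is_managed or not item.managed_origin


def recopy_as_observed(item: ClipboardItem) -> ClipboardItem:
    # What the shortcut effectively does today: the content is written
    # back as a fresh item and the origin marker is lost.
    return ClipboardItem(content=item.content, managed_origin=False)


def recopy_as_proposed(item: ClipboardItem) -> ClipboardItem:
    # Proposed fix: the processed content inherits the origin marker.
    return replace(item, content=item.content)


secret = ClipboardItem("quarterly figures", managed_origin=True)

print(paste_allowed(secret, target_is_managed=False))
print(paste_allowed(recopy_as_observed(secret), target_is_managed=False))
print(paste_allowed(recopy_as_proposed(secret), target_is_managed=False))
```

In this model the direct paste and the paste after the proposed re-copy are both refused, while the paste after the observed re-copy goes through, which mirrors the bypass described above.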

We reported this flaw via Apple’s responsible disclosure process, but the issue was rejected. We provided a detailed description, screenshots and videos. In a second attempt we explained again that the bypass circumvents an advertised security feature of iOS, but the report was still not accepted. We also reached out to Apple via feedbackassistant.apple.com and did not receive an answer within two months. As the current Shortcuts app version 6.1.2 is still vulnerable, we decided to publish the findings to warn enterprises that they cannot rely on the iOS data flow restrictions.

A short-term solution would be to delete the iOS Shortcuts app from the devices and block its installation with the MDM blacklist via the bundle ID “com.apple.shortcuts”, or to exclude devices that have the Shortcuts app installed from the domain as non-compliant, depending on the functionality of the MDM solution in use. However, in the VMware Workspace ONE UEM (AirWatch) MDM we tested, blacklisting the Shortcuts app neither prevented its installation nor caused the device to be marked as non-compliant.
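As a rough sketch of this mitigation, the snippet below builds a Restrictions payload fragment with the `blockedAppBundleIDs` key and adds a simple compliance check over a list of installed bundle IDs. Several assumptions apply: to our knowledge the key only takes effect on supervised devices, the helper `is_compliant` and the sample app inventories are hypothetical, and a real MDM would obtain the installed-app list through its own inventory mechanism. As noted above, the MDM we tested did not enforce this reliably, so the result should be verified on a real device.

```python
import plistlib

# Bundle ID of the Shortcuts app, as named in this article.
BLOCKED_BUNDLE_IDS = ["com.apple.shortcuts"]

# Fragment of a Restrictions payload (com.apple.applicationaccess).
# Not a complete profile; identifiers and UUIDs are omitted for brevity.
block_payload = {
    "PayloadType": "com.apple.applicationaccess",
    "PayloadVersion": 1,
    "blockedAppBundleIDs": BLOCKED_BUNDLE_IDS,
}
block_payload_xml = plistlib.dumps(block_payload).decode("utf-8")


def is_compliant(installed_bundle_ids: list[str]) -> bool:
    """Hypothetical check: non-compliant if any blocked app is installed."""
    return not set(installed_bundle_ids) & set(BLOCKED_BUNDLE_IDS)


# Illustrative app inventories, not taken from a real device.
device_a = ["com.apple.mobilemail", "com.apple.mobilenotes"]
device_b = ["com.apple.mobilemail", "com.apple.shortcuts"]

print(is_compliant(device_a))
print(is_compliant(device_b))
```

A check like this is only a fallback for MDM products whose built-in compliance rules do not flag the app on their own.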

Unless the Shortcuts app is properly blocked, company data can currently be transferred, even unintentionally, into unmanaged apps by manually run or automated shortcuts and thus leave the company in an uncontrolled manner.

You can visit us at it-sa Expo&Congress, 10–12 October, in Hall 6, Booth 210 for further details.