Third-party libraries are widely used in Android apps, where they take over part of the functionality and make app development easier. Because these libraries inherit the privileges of the host app, they are often overprivileged. Libraries can abuse these privileges, oftentimes through extensive data collection. This article delves into the issue of permission piggybacking, a technique where libraries probe permissions and adapt their behaviour accordingly, without making any permission requests of their own. We analysed the top 1,000 applications on Google Play for permission piggybacking. Our results show that it is extremely widespread and poses a significant problem that needs urgent attention.

The Android operating system is home to millions of applications, each providing users with a unique set of features and services. To ensure that these applications interact safely with user data and other app components, Android employs a permission system. However, the reality is far from ideal. Apps often employ third-party libraries to offload certain tasks and functionalities, and these libraries inherit all permissions of the main app. In most cases, these permissions exceed what the library requires. Many libraries exploit this to probe which permissions have already been granted and then use or collect whatever data those permissions make available.

Understanding Permission Piggybacking

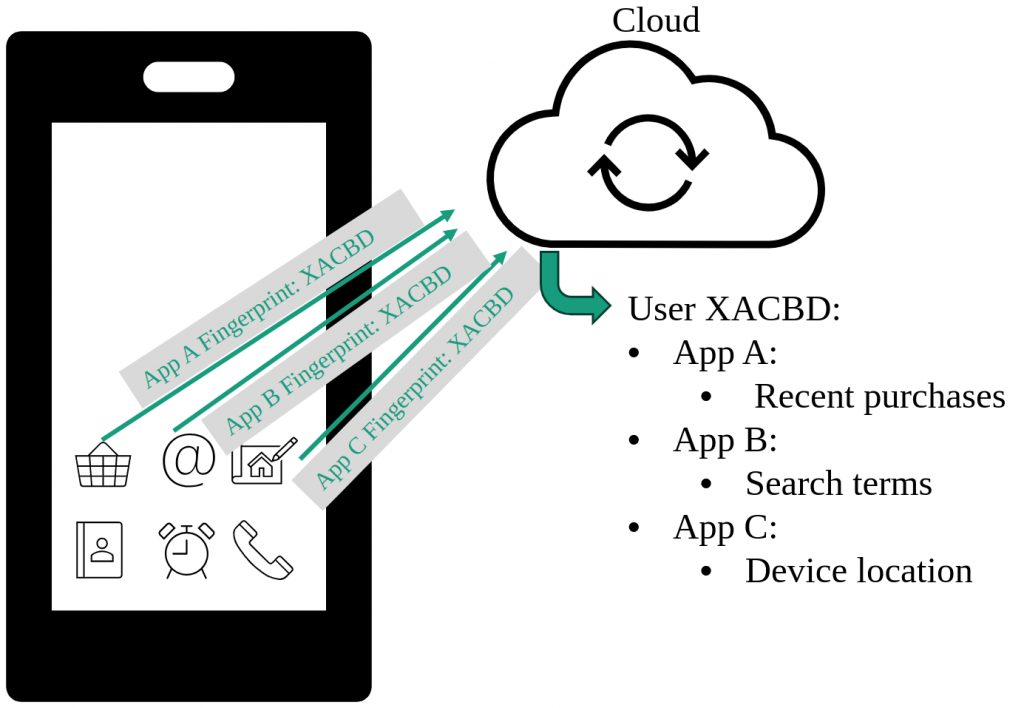

Permission piggybacking occurs when third-party libraries, integrated within a main app, probe the already granted permissions and adapt their behaviour accordingly, without explicitly requesting any permissions of their own. Libraries using this technique can access user data and critical functionalities, particularly when embedded in an application with high privileges.

This issue is not limited to a few apps. Most apps, from games to critical banking applications, employ libraries. An app often uses five to ten libraries, sometimes up to 50, and the app developer often does not know in detail how each library works and what it does in the background. This is a significant concern, as these libraries can gather more personal and sensitive user data than required for their primary functionality, posing a considerable privacy risk.

The Research Approach

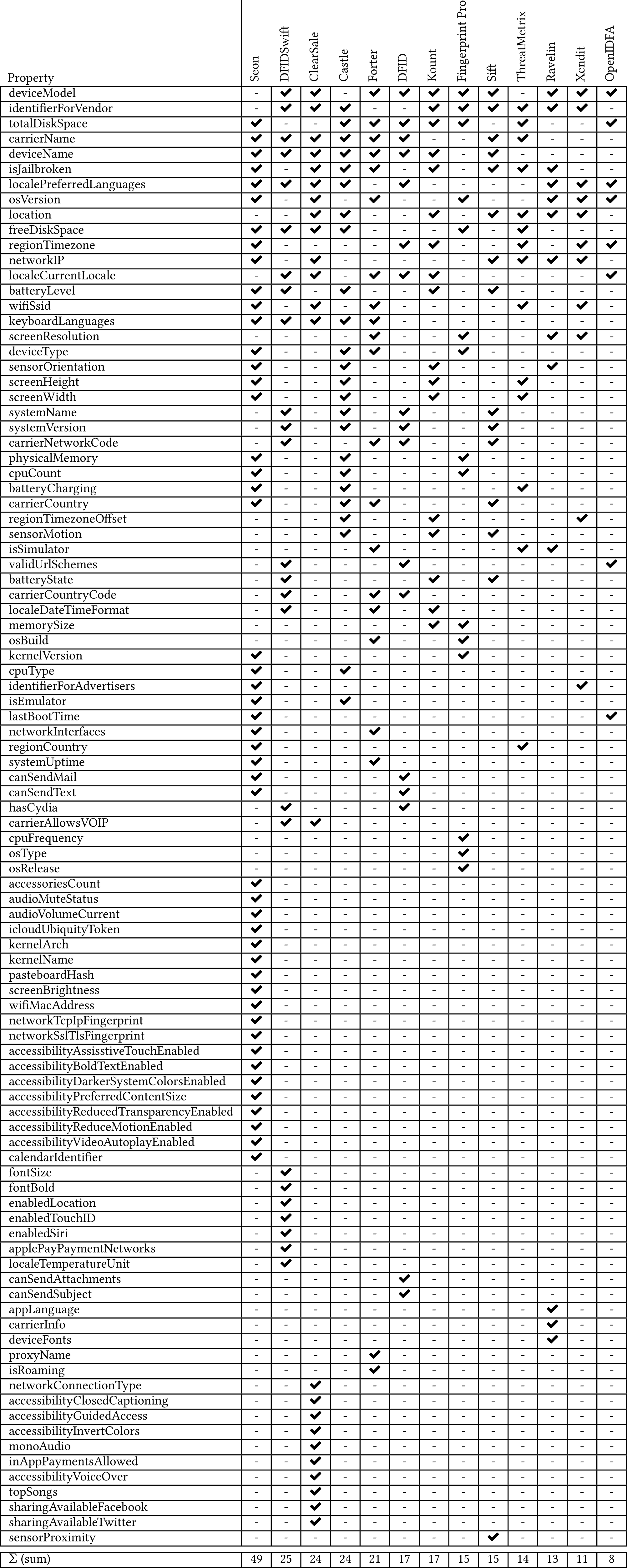

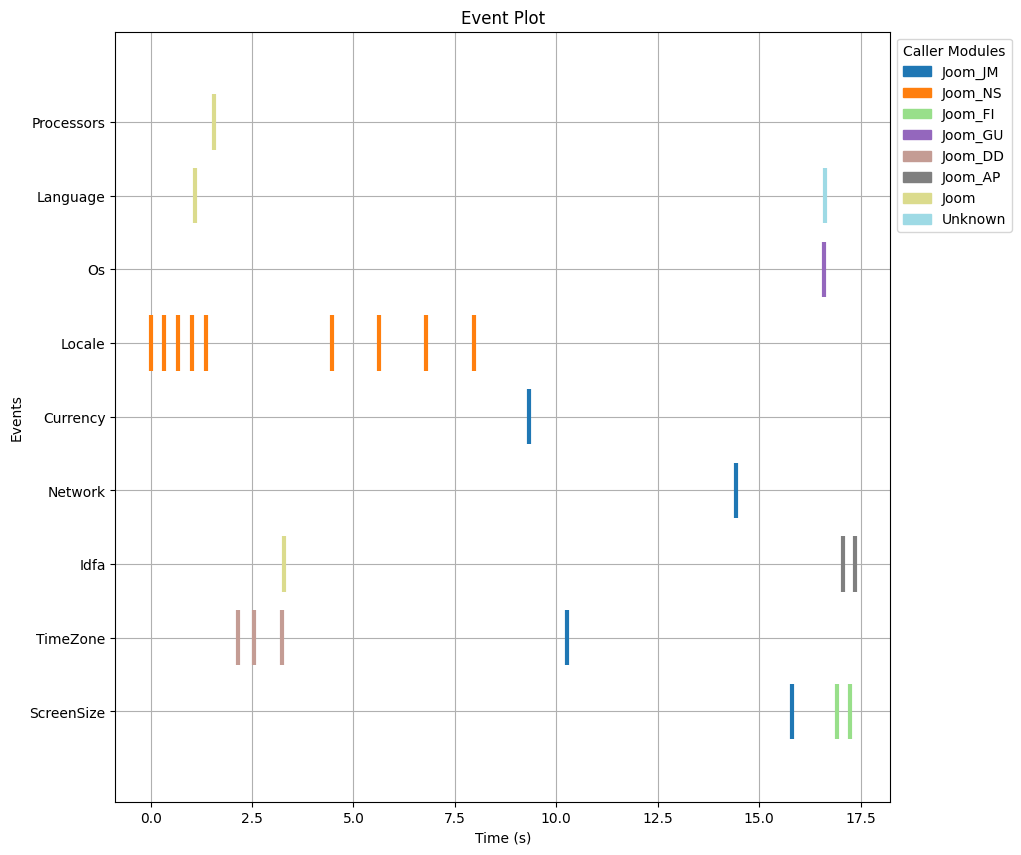

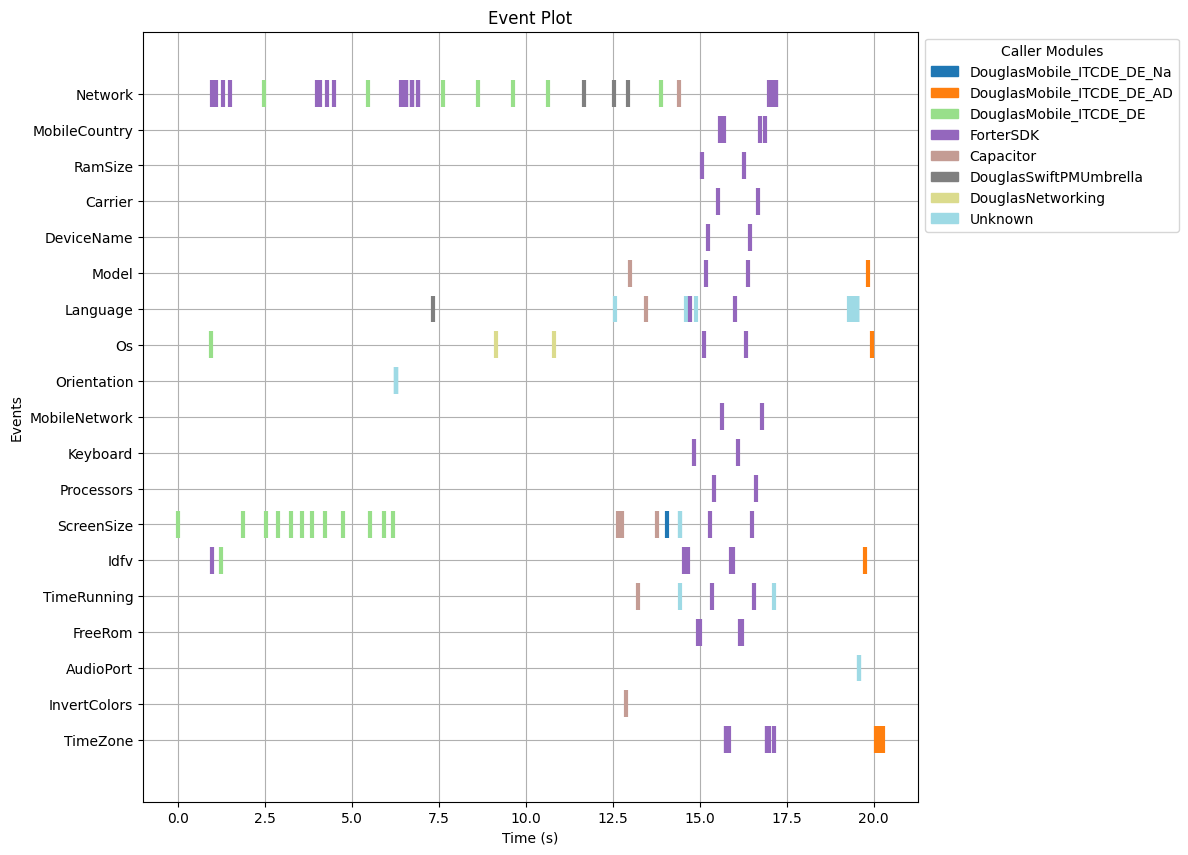

Our research aimed to assess the prevalence and impact of permission piggybacking in third-party libraries. To achieve this, we developed a novel analysis technique that can detect opportunistic permission usage by third-party libraries. In the normal case, a library that needs access to a protected resource first checks whether the corresponding permission has already been granted. If not, the library triggers a permission prompt to request it and then uses the restricted resource. With permission piggybacking, the library only checks for and uses permissions that have already been granted, but never requests them itself.
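
The difference between the two behaviours can be illustrated with a small toy model. This is only a sketch and not Android code: `HostApp`, `check`, `request` and both library functions are hypothetical names, and the model assumes the user accepts every prompt. On Android, the corresponding calls would typically be permission checks such as `checkSelfPermission` and permission requests such as `requestPermissions`.

```python
from dataclasses import dataclass, field


@dataclass
class HostApp:
    """Toy stand-in for an app process; all libraries share its permissions."""
    granted: set = field(default_factory=set)
    prompts_shown: list = field(default_factory=list)

    def check(self, permission: str) -> bool:
        # Silent permission check: the user does not notice anything.
        return permission in self.granted

    def request(self, permission: str) -> bool:
        # Permission request: the user sees a prompt.
        self.prompts_shown.append(permission)
        self.granted.add(permission)  # assumption: the user accepts
        return True


def well_behaved_library(app: HostApp) -> str:
    # Check first, request if missing, then use the resource.
    if not app.check("ACCESS_FINE_LOCATION"):
        app.request("ACCESS_FINE_LOCATION")
    return "location used"


def piggybacking_library(app: HostApp) -> str:
    # Only check, never request; adapt to whatever is already granted.
    if app.check("ACCESS_FINE_LOCATION"):
        return "location collected"
    return "no location, stay silent"


if __name__ == "__main__":
    app = HostApp()
    print(piggybacking_library(app))  # nothing granted yet
    print(well_behaved_library(app))  # triggers the only prompt
    print(piggybacking_library(app))  # now profits from the grant
    print(app.prompts_shown)
```

In this model the piggybacking library never appears in `prompts_shown`, which is why the user cannot tell from the permission dialogs that it accesses the location at all.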

In our approach, we use static analysis to first search for API calls that check permissions. Afterwards, we compare them with the API calls that request permissions. Finally, we attribute each API call to either the main app or one of the integrated libraries, so that checked and requested permissions can be evaluated on a per-library basis. We flag a library as permission piggybacking whenever it checks more permissions than it requests.
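
The flagging rule can be sketched as follows. This is a minimal illustration and not our actual analysis tooling: the input format, the package names in `CALLS` and the function `piggybacked_permissions` are hypothetical, and "checks more permissions than it requests" is interpreted here as a set difference per component. The sketch also assumes that the static analysis has already resolved every call, which is not always possible in practice.

```python
from collections import defaultdict

# Hypothetical analysis output: (calling component, kind of call, permission)
CALLS = [
    ("com.example.app", "check", "ACCESS_FINE_LOCATION"),
    ("com.example.app", "request", "ACCESS_FINE_LOCATION"),
    ("com.example.adsdk", "check", "ACCESS_FINE_LOCATION"),
    ("com.example.adsdk", "check", "READ_PHONE_STATE"),
    ("com.example.maps", "check", "ACCESS_COARSE_LOCATION"),
    ("com.example.maps", "request", "ACCESS_COARSE_LOCATION"),
]


def piggybacked_permissions(calls, main_app):
    """Return, per library, the permissions it checks but never requests."""
    checked = defaultdict(set)
    requested = defaultdict(set)
    for component, kind, permission in calls:
        if kind == "check":
            checked[component].add(permission)
        elif kind == "request":
            requested[component].add(permission)

    findings = {}
    for component, permissions in checked.items():
        if component == main_app:
            continue  # only libraries are of interest here
        unrequested = permissions - requested[component]
        if unrequested:
            findings[component] = sorted(unrequested)
    return findings


if __name__ == "__main__":
    print(piggybacked_permissions(CALLS, main_app="com.example.app"))
```

With the sample data, only `com.example.adsdk` is flagged, because it checks two permissions without requesting either of them, while `com.example.maps` requests what it checks.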

Evaluation Results

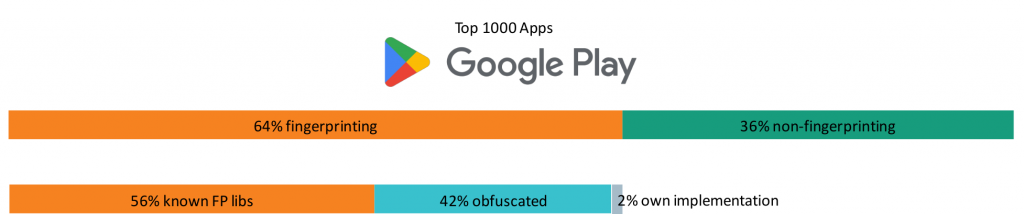

We then put this technique to the test by analysing the top 1,000 applications on Google Play. We aimed to measure the extent of opportunistic permission usage by third-party libraries and determine the prevalence of this technique.

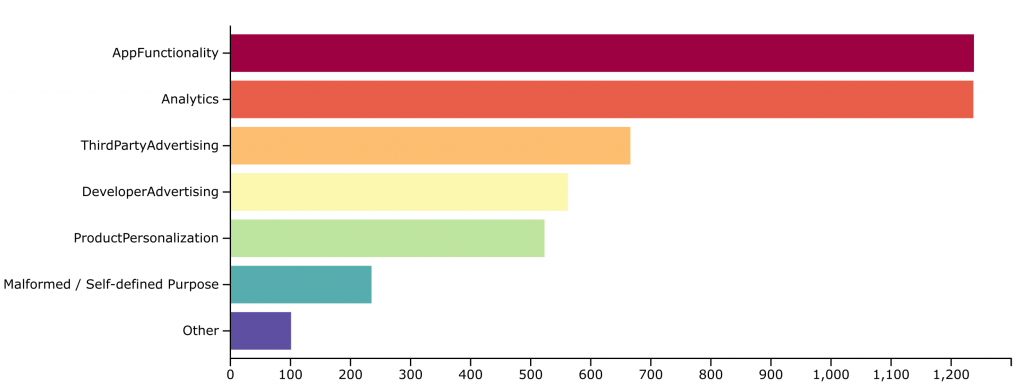

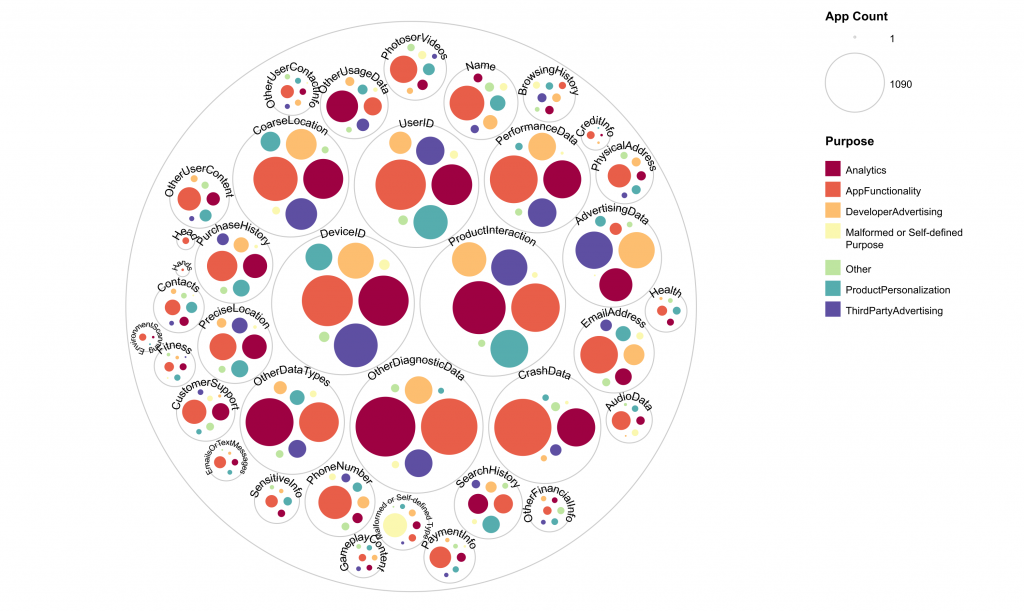

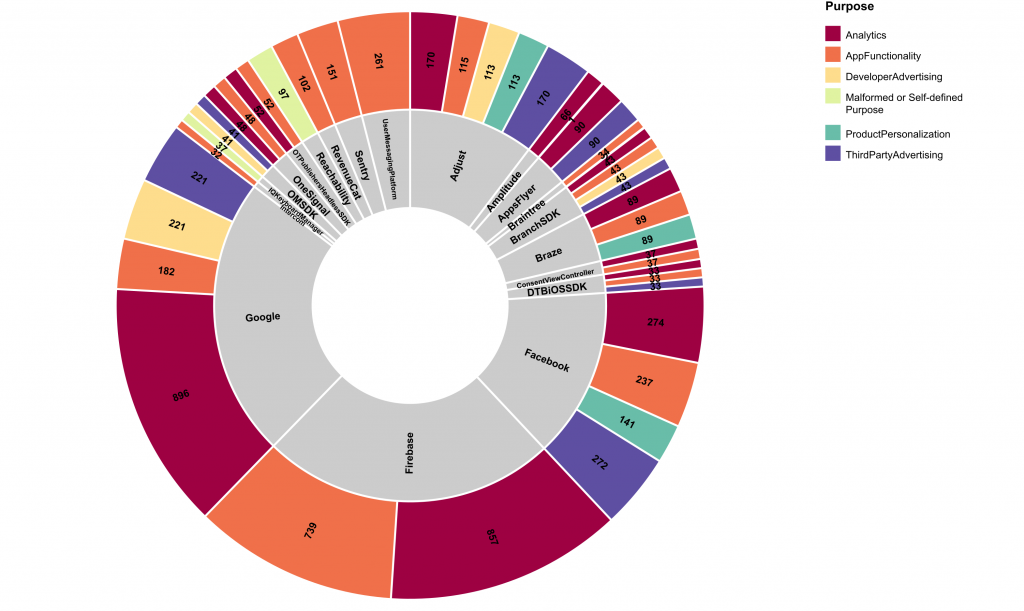

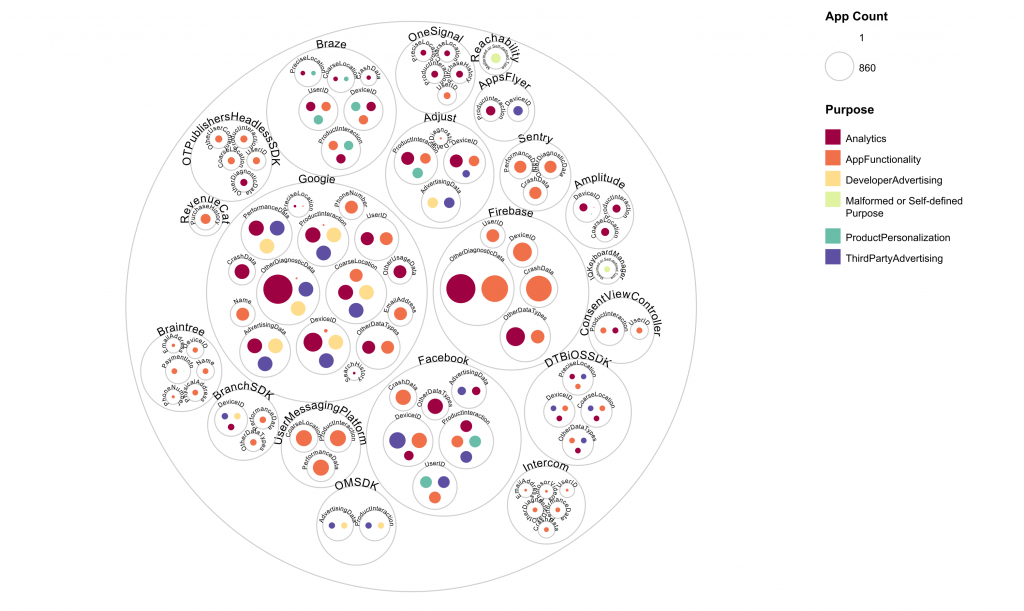

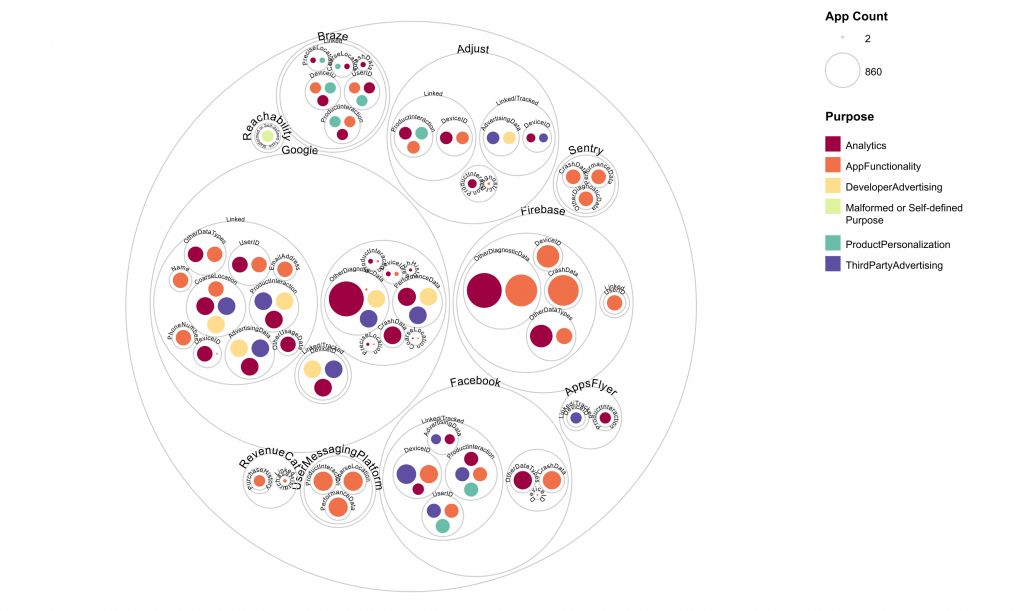

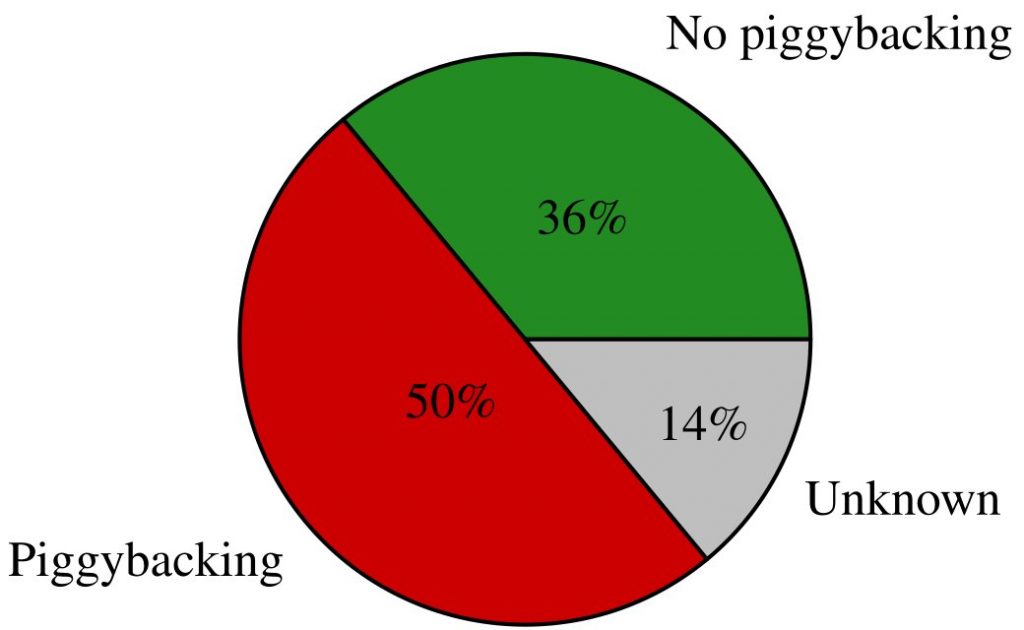

From the 1,000 apps, we extracted 851 different libraries. For 14% of the libraries, we were unable to determine with certainty whether permission piggybacking is used, due to the limits of static analysis. However, we were able to determine that as many as 50% of the 851 libraries use permission piggybacking.

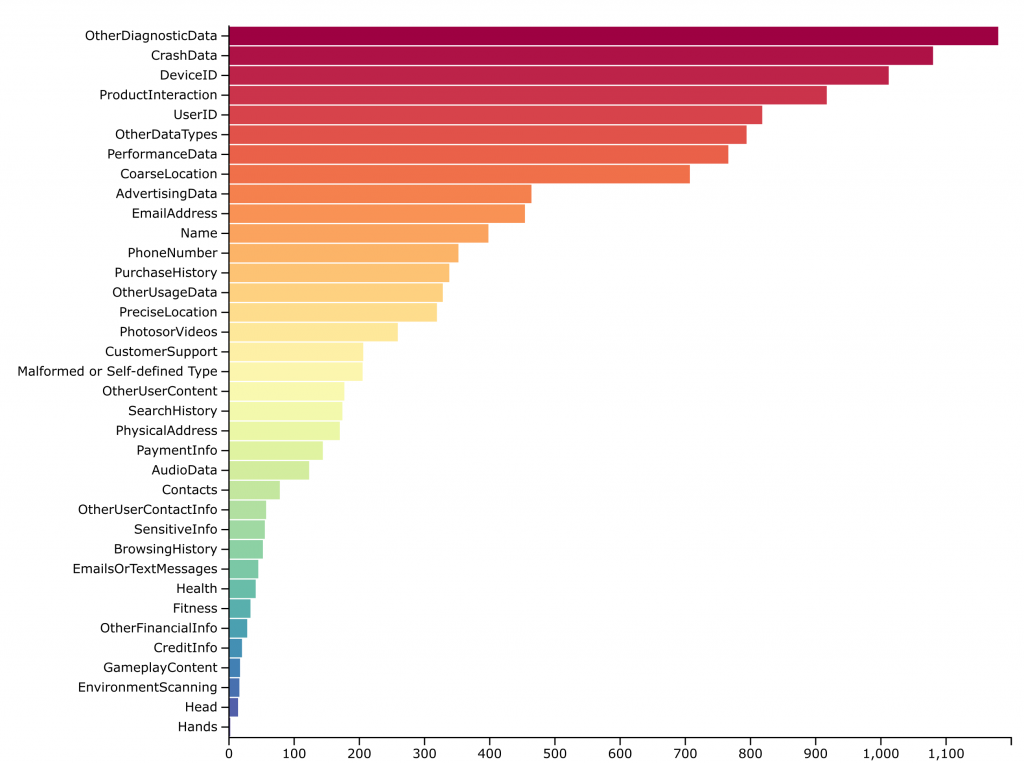

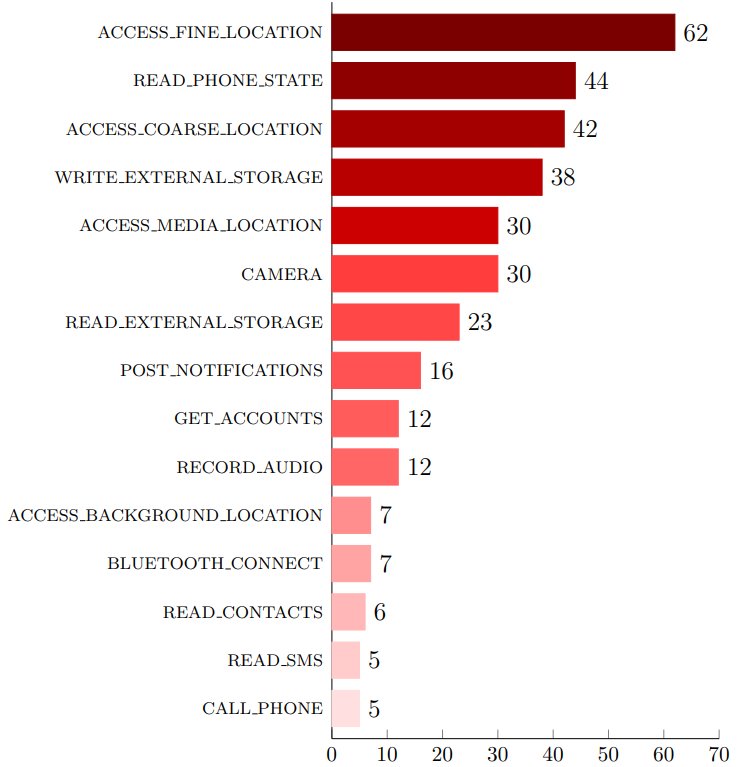

Interestingly, the permissions most often piggybacked were almost exclusively dangerous permissions as defined by the Android documentation. Specifically, these were fine and coarse location (GPS and mobile network cell) and read phone state. These permissions provide access to sensitive user data and make it possible to uniquely identify and track devices, as described in our fingerprinting article.

Furthermore, our analysis revealed that most libraries engaging in permission piggybacking were related to advertising, usage statistics and tracking. These libraries, by their nature, have a strong interest in extensive data collection.

Further details on our detection mechanism can be found in our paper, available at SciTePress and published at the ICISSP 2025 conference. The paper received the best paper award of the conference.

Conclusion and Outlook

Our research underscores that permission piggybacking remains a significant and widespread issue, with 50% of the analysed libraries leveraging this technique. In practice, the chances of having a piggybacking library installed are therefore very high. To address this effectively, a more granular permission system at the library level would be a viable solution.

Until Google implements such measures in Android, users must be mindful of the permissions they grant to the apps they install. Even though granting the location permission to a navigation app, for example, seems legitimate, advertising or usage statistics libraries integrated into the app can piggyback on this permission and abuse it for data collection. Depending on the use case of an app, it may also be an option to provide the location manually, e.g. by entering the town name in a weather app once instead of granting the location permission, which would allow all included libraries to track any location changes of the user.

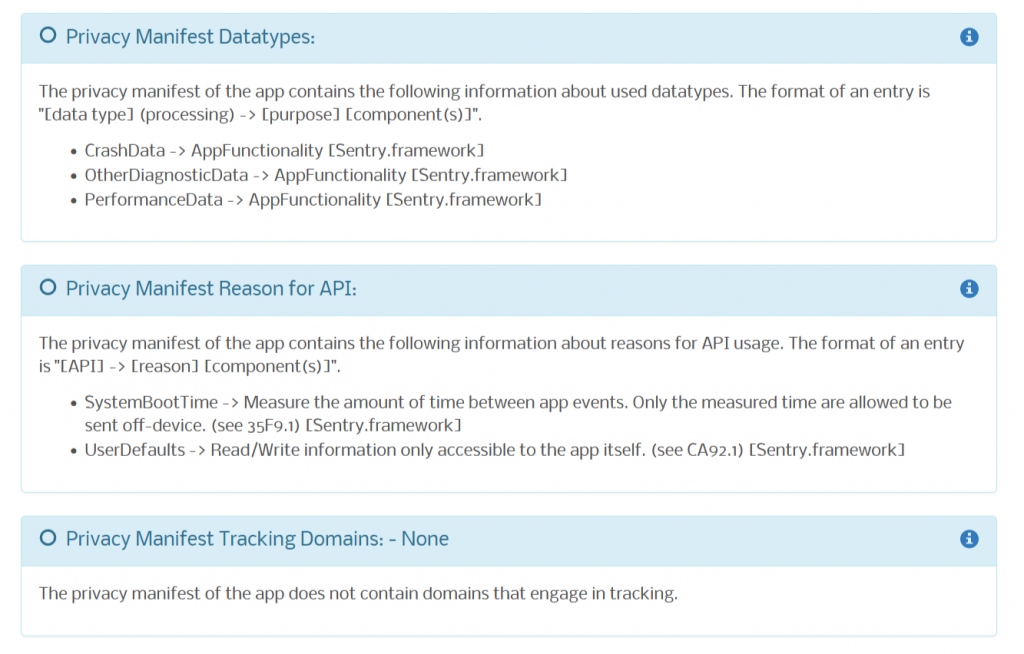

Appicaptor is already capable of analysing the possible types of accessed data, the permissions used and the integrated third-party libraries. Appicaptor results thus already provide a viable foundation for a user's informed app selection. We are currently working on integrating the permission piggybacking detection approach into Appicaptor for our customers.