With Appicaptor, we analyze mobile apps with a strong focus on their IT security quality and provide companies with well-founded assessments of potential risks. These analyses serve as an important basis for decisions by IT departments and security managers. In practice, however, we also see that complex and extensive analysis reports often prompt very specific follow-up questions. Customers want more details about particular analysis criteria, their relevance in the customer’s own environment, or background information on specific security issues. Most of these details are already documented in the report, but because of the report’s size, finding them can take some effort.

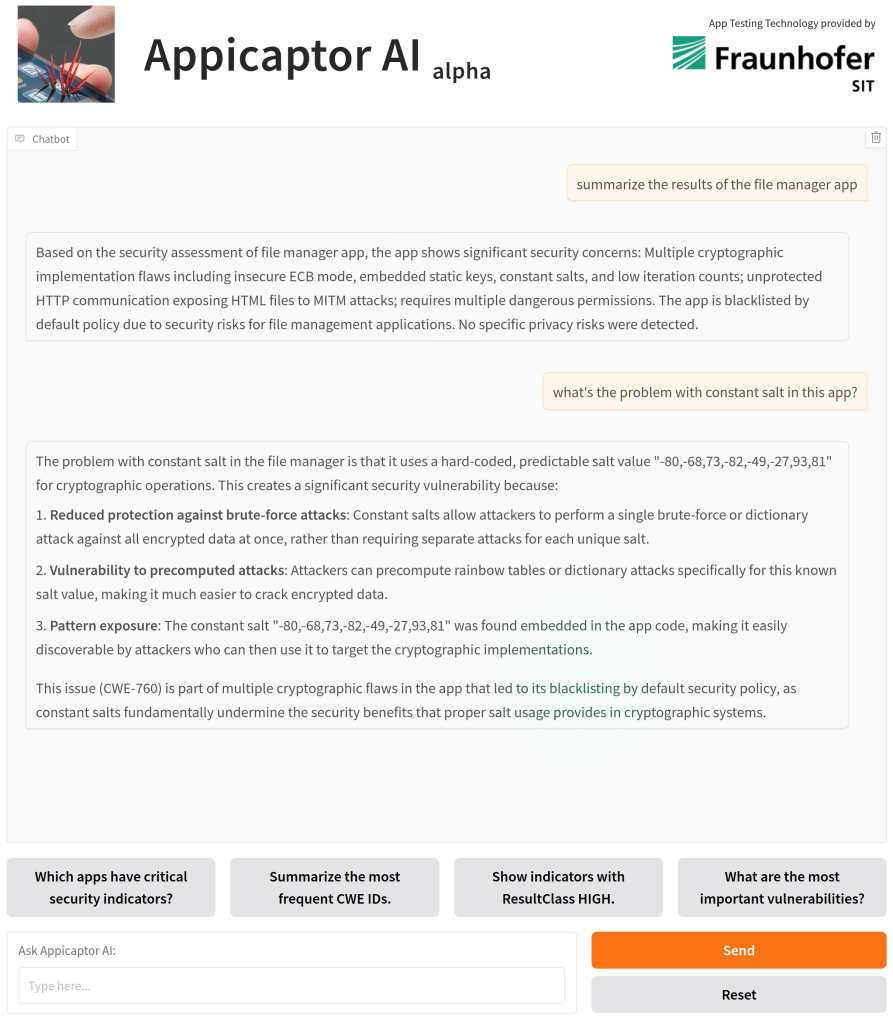

To lower this barrier and help users better understand what the results imply, we are, as a first step, researching the possibilities of an AI-powered follow-up system for app security reports, based on our current Appicaptor reports.

Technical Approach

The centerpiece of the new interactive capability is a Large Language Model (LLM) that acts as a conversational interface to our analysis data and IT security expertise. To achieve this, we use domain-specific embeddings that provide the LLM with the context it needs to give precise, reliable answers. The embeddings are generated from three main knowledge sources: the analysis results of a customer’s individual app report; the structured descriptions of our evaluation criteria, covering aspects such as data protection, use of cryptography, platform-specific guidelines, and permission management; and specialized knowledge from the domain of mobile app security, consolidated from best practices, common vulnerabilities, and security standards.
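To illustrate the general idea of embedding-based retrieval over these three kinds of sources, here is a minimal Python sketch. It is not the Appicaptor implementation: the post does not name the embedding model, so a hashed bag-of-words vector stands in for a real neural embedding model, and the chunk texts, the source labels `report`, `criteria` and `domain`, `DIM`, `embed` and `top_k` are all hypothetical.

```python
import math
import re
import zlib
from collections import Counter
from dataclasses import dataclass

DIM = 1024  # hypothetical vector size for this toy example


@dataclass(frozen=True)
class Chunk:
    source: str  # hypothetical labels: "report", "criteria" or "domain"
    text: str


def embed(text: str) -> list[float]:
    """Toy stand-in for an embedding model: hashed bag of words, L2-normalised."""
    vec = [0.0] * DIM
    for token, count in Counter(re.findall(r"[a-z0-9]+", text.lower())).items():
        vec[zlib.crc32(token.encode()) % DIM] += count
    norm = math.sqrt(sum(v * v for v in vec)) or 1.0
    return [v / norm for v in vec]


def cosine(a: list[float], b: list[float]) -> float:
    # Both vectors are unit length, so the dot product equals the cosine similarity.
    return sum(x * y for x, y in zip(a, b))


def top_k(index: list[tuple[Chunk, list[float]]], query: str, k: int = 2) -> list[Chunk]:
    """Return the k chunks whose embeddings are most similar to the query embedding."""
    q = embed(query)
    ranked = sorted(index, key=lambda item: cosine(q, item[1]), reverse=True)
    return [chunk for chunk, _ in ranked[:k]]


# Hypothetical knowledge base drawn from the three kinds of sources.
chunks = [
    Chunk("report", "The app transmits user data over unencrypted HTTP connections."),
    Chunk("report", "The app requests camera and location permissions at startup."),
    Chunk("criteria", "Criterion: network traffic must use TLS; cleartext HTTP is rated as a data protection risk."),
    Chunk("domain", "Weak hash functions such as MD5 or SHA-1 should not be used to store passwords."),
]
index = [(chunk, embed(chunk.text)) for chunk in chunks]

for hit in top_k(index, "Why is unencrypted HTTP a risk?"):
    print(hit.source, "|", hit.text)
```

The toy vector only rewards shared words; a real embedding model would likely also match a paraphrased question such as "Is my traffic encrypted?", which is the reason to use embeddings rather than keyword search.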

By combining these data sources, we aim to enable the LLM to go beyond simple report summaries. Thanks to the embedding approach, it retrieves relevant information from the curated knowledge base before responding. This reduces the likelihood of incorrect outputs and helps keep responses consistent and precise. Because the LLM is self-hosted and data is loaded per customer, customer data remains private. Furthermore, the data is not used for training, and the LLM cannot access the content of other customers’ reports.
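The customer isolation described above can be sketched as a filter applied before ranking: only the requesting customer’s report data and the shared knowledge (criteria and domain knowledge) are ever candidates, so nothing from another customer’s report can reach the prompt. This is a minimal sketch under our own assumptions, not the Appicaptor implementation: `Document`, `customer_id`, the word-overlap score (a placeholder for embedding similarity) and the prompt wording are all hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Document:
    text: str
    customer_id: Optional[str]  # None marks shared knowledge (criteria, domain knowledge)


def visible_documents(store: list[Document], customer_id: str) -> list[Document]:
    """Keep only the requesting customer's own data plus shared knowledge."""
    return [d for d in store if d.customer_id is None or d.customer_id == customer_id]


def overlap_score(question: str, text: str) -> int:
    """Hypothetical placeholder for embedding similarity: number of shared lowercase words."""
    return len(set(question.lower().split()) & set(text.lower().split()))


def build_prompt(store: list[Document], customer_id: str, question: str, k: int = 3) -> str:
    """Filter first, then rank, then ground the LLM in the retrieved context only."""
    candidates = visible_documents(store, customer_id)
    ranked = sorted(candidates, key=lambda d: overlap_score(question, d.text), reverse=True)
    context = "\n".join(f"- {d.text}" for d in ranked[:k])
    return (
        "Answer using only the context below. "
        "If the context does not cover the question, say so.\n"
        f"Context:\n{context}\n\nQuestion: {question}"
    )


# Hypothetical store with reports from two customers and one shared criterion.
STORE = [
    Document("Acme Banking app: cleartext HTTP traffic detected to its API endpoint.", "acme"),
    Document("Globex Mail app: cleartext HTTP traffic detected in an analytics SDK.", "globex"),
    Document("Criterion: cleartext HTTP is rated as a data protection risk.", None),
]

prompt = build_prompt(STORE, "acme", "Why is cleartext HTTP flagged in my report?")
print(prompt)
```

Filtering before ranking, rather than after, means another customer’s data never enters the candidate set at all. A production system would more likely enforce this with separate per-customer indexes or access controls in the vector store; the post does not say which mechanism is used.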

Added Value for Customers

With this development, we will reduce the effort required to understand complex security criteria. Instead of issuing additional requests or conducting further research, customers can then instantly receive tailored explanations. Responses are based on their own report data, combined with industry knowledge. This saves time, improves transparency, and builds confidence in decisions about which apps to approve or restrict.

Outlook – Preview at it-sa in Nuremberg

Although this development is still underway, we want our customers and interested visitors to experience it, and we want to gather early feedback. That’s why we will showcase a preliminary version of the AI-powered follow-up system at it-sa in Nuremberg from October 7th to October 9th, 2025. You can find us in Hall 6, Booth 416. Visitors will be able to try the system live and see firsthand the benefits of interactive, AI-assisted app security reports.